Abstract

LiDAR sensors are used in autonomous driving applications to accurately perceive the environment. However, they are affected by adverse weather conditions such as snow, fog, and rain. These everyday phenomena introduce unwanted noise into the measurements, severely degrading the performance of LiDAR-based perception systems.

In this work, we propose a framework for improving the robustness of LiDAR-based 3D object detectors against road spray. Our approach uses a state-of-the-art adverse weather detection network to filter out spray from the LiDAR point cloud, which is then used as input for the object detector. In this way, the detected objects are less affected by the adverse weather in the scene, resulting in a more accurate perception of the environment. In addition to adverse weather filtering, we explore the use of radar targets to further filter out false positive detections. Tests on real-world data show that our approach improves the robustness of several popular 3D object detectors to road spray.

Method

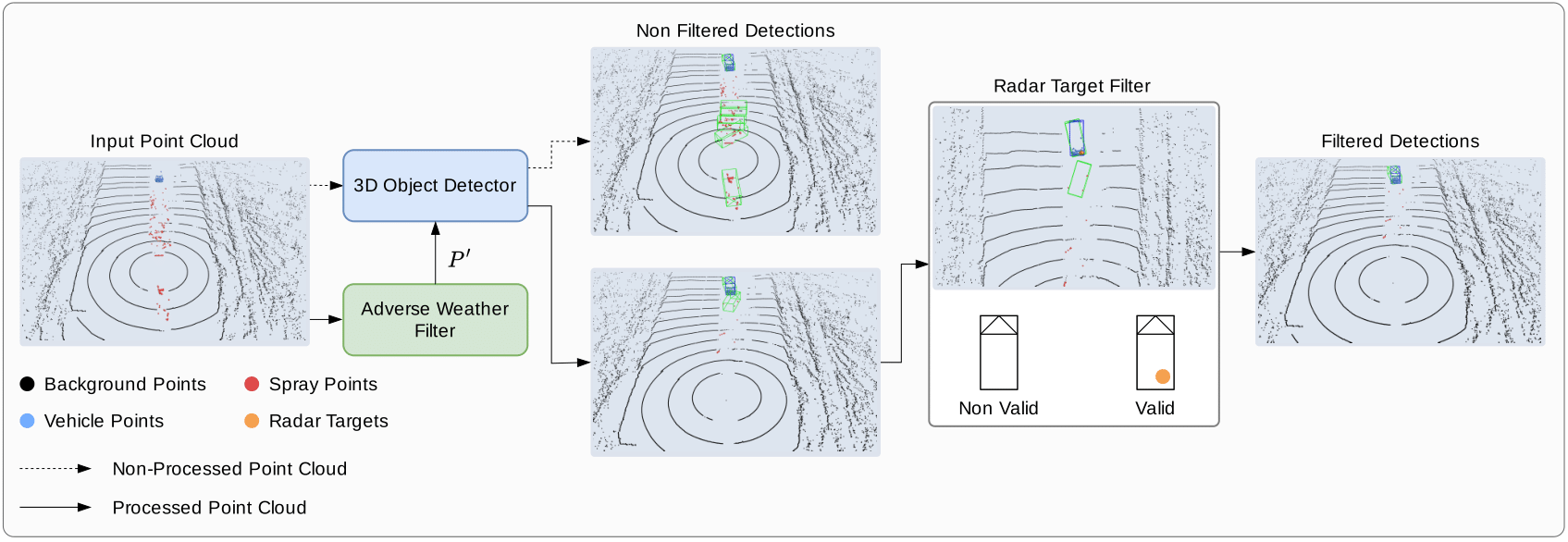

Overview of our proposed method. Given a LiDAR point cloud \(\boldsymbol{P}\), we first remove all adverse weather points and obtain a filtered point cloud \(\boldsymbol{P'}\), which is used as input for the object detector. Compared to directly using the unprocessed point cloud as input, the resulting detections are less affected by road spray. In a multi-sensor setup that includes radar, we use its inherent robustness to adverse weather to further filter out false positive detections by checking whether each detected object has an associated radar target. The point colors are used for visualization purposes only. We use blue boxes to represent the ground truth and green boxes for object detections.
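The two filtering stages described above can be illustrated with a minimal sketch. It is not the implementation used in this work: the function names, the dictionary layout of a detection, and the `margin` parameter are hypothetical, and the association rule (a radar target falling inside the axis-aligned bird's-eye-view footprint of a box) is an assumption made only for illustration. The per-point boolean mask stands in for the output of the adverse weather detection network.

```python
import numpy as np


def filter_adverse_weather(points, is_weather):
    """Return P': the points of P that are not flagged as adverse weather.

    points:     (N, C) array, one LiDAR point per row.
    is_weather: (N,) boolean mask, True for spray points (assumed to come
                from the adverse weather detection network).
    """
    points = np.asarray(points)
    is_weather = np.asarray(is_weather, dtype=bool)
    return points[~is_weather]


def has_radar_target(center, size, radar_xy, margin=0.5):
    """Check whether any radar target lies inside the box footprint.

    Assumption: boxes are treated as axis-aligned in the x-y plane and
    enlarged by `margin` meters; heading is ignored for simplicity.
    """
    radar_xy = np.asarray(radar_xy, dtype=float).reshape(-1, 2)
    half_extent = np.asarray(size[:2], dtype=float) / 2.0 + margin
    offsets = np.abs(radar_xy - np.asarray(center[:2], dtype=float))
    return bool(np.any(np.all(offsets <= half_extent, axis=1)))


def filter_detections_with_radar(detections, radar_xy, margin=0.5):
    """Keep only detections that have an associated radar target."""
    return [
        det for det in detections
        if has_radar_target(det["center"], det["size"], radar_xy, margin)
    ]


# Toy example: two vehicle points and one spray point (x, y, z, intensity).
P = np.array([
    [10.0, 0.0, 0.0, 0.5],
    [10.2, 0.1, 0.0, 0.4],
    [5.0, 1.0, 0.2, 0.1],
])
mask = np.array([False, False, True])
P_filtered = filter_adverse_weather(P, mask)

# Two detections, only the first one is backed by a radar target.
detections = [
    {"center": (10.0, 0.0, 0.0), "size": (4.0, 2.0, 1.5)},
    {"center": (5.0, 1.0, 0.0), "size": (4.0, 2.0, 1.5)},
]
radar_targets = np.array([[10.5, 0.2]])
kept = filter_detections_with_radar(detections, radar_targets)
```

In this toy example, the spray point is dropped before detection and the second box, which has no radar target nearby, is discarded as a likely false positive. In practice the object detector would run on `P_filtered` between the two steps.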

Results

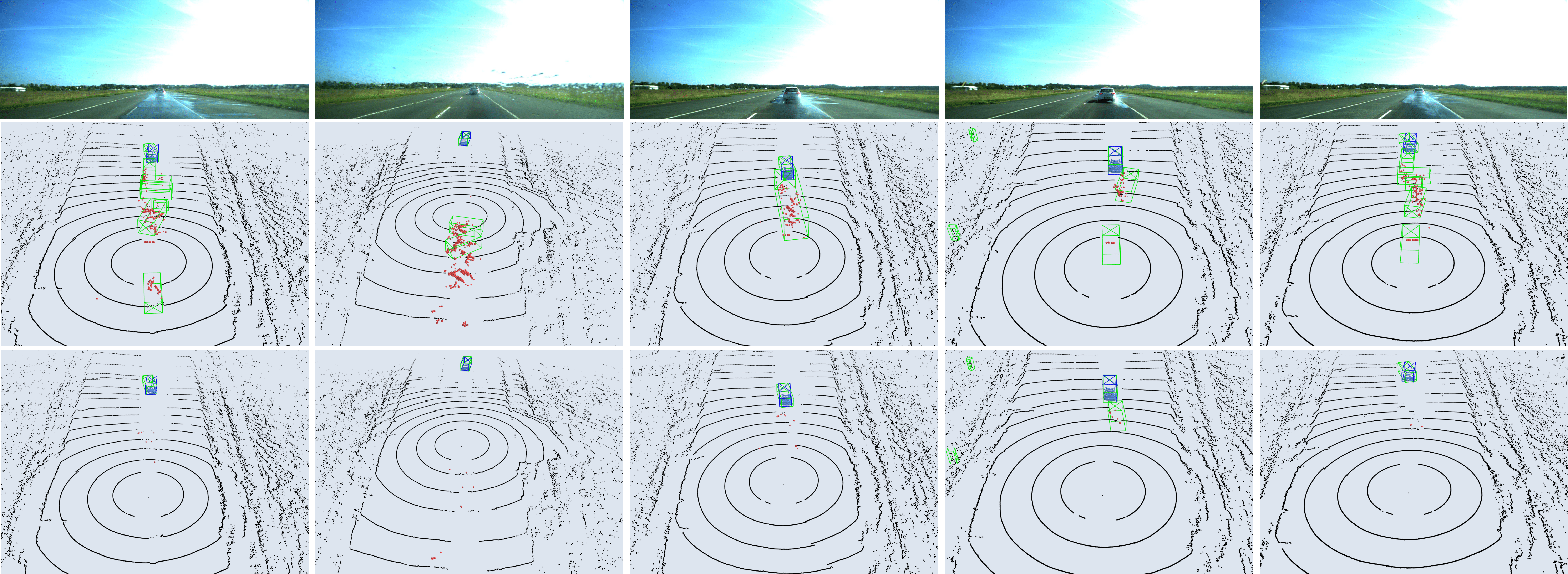

Qualitative results of the proposed adverse weather filtering approach. The top row shows the camera images (used for visualization only). The middle row shows the results of SECOND without point cloud preprocessing. The bottom row shows the results when AWNet is used for adverse weather filtering. We show spray points in red and vehicle points in light blue. We use blue boxes to represent the ground truth and green boxes for object detections.